Although artificial intelligence can help achieve wondrous things, it’s only as good as the information it’s learnt from. Tech Farmer looks at how synthetic imagery could take green-on-green precision to new levels.

Written by Lucy de la Pasture

Although per plant farming may have suffered a setback with the loss of the Small Robot Company, the vision for this ultimate precision in spray application is far from dead. While there are over 300 agricultural robotics companies globally and that number is increasing, uptake of a technology many view as progressive remains slow.

In the meantime, major players in the sprayer manufacture market have green-on-green solutions available or in the pipeline, all with the aim of significantly reducing the quantity of pesticides applied, paring costs and diminishing environmental impacts.

Current green-on-green technology is dependent on artificial intelligence (AI) which has been trained to recognise targets within a crop. This machine-learning process is underpinned by the analysis of huge data sets to develop algorithms relevant to different crops and the agronomy challenges faced. It’s a long and slow process, and not just because of the time it takes to gather the imagery. Currently, to build an accurate data set, cameras have to capture every possible scenario, such as different growth stages, lighting and shadows, and every one of those images then has to be labelled manually. It’s a near impossible task, and the limitations of the vision systems themselves add to the challenge when training AI.

Alongside the big brands, specialist agri-tech companies are bringing some typically out-of-the-box thinking, allowing more novel approaches to potentially disrupt the market. One of these is Suffolk-based AgriSynth, which aims to bring a highly accurate but lower-cost solution to the pinch point that all the AI-driven systems have – teaching the AI.

It was a Star Wars movie that triggered the idea that the AgriSynth concept has been built on, explains Colin Herbert, AgriSynth’s founder and CEO. Watching the movie, he was struck by the computer-generated imagery and it clicked that the same approach could be used to make up images by generating them on a computer.

With the seed of an idea, Colin began to research the field of synthetic imagery, which is where pictures are generated using computer graphics, simulation methods and AI to represent reality. To his surprise he found that although synthetic imagery was being developed in the medical field, for example, it wasn’t being looked at in agriculture.

The use of synthetic images in machine learning solves two major problems – image acquisition and labelling, explains Colin. “A raw image means nothing to AI software; it has to learn how to classify different objects in an image and this is done by a process of labelling.”

Traditionally, there are several ways to annotate images to teach AI systems. Bounding boxes are rectangles drawn in software around an object – a weed, a stone, and so on – and it’s a relatively quick and easy thing to do. But there are limitations when it comes to accuracy.

“Bounding boxes can’t account for things like an object in part shadow, leaves that are overlapping, or the leaves of a weed that are mostly hidden behind a weed of another species. It can be very difficult to see where one plant starts and another one finishes in many images, especially with grassweeds.

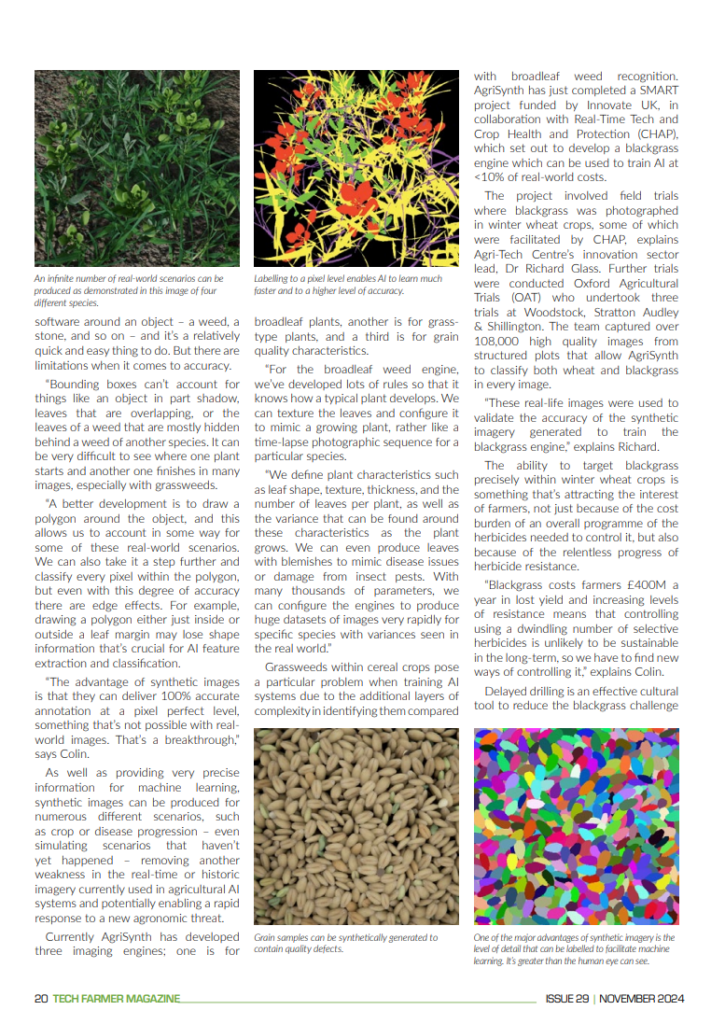

“A better development is to draw a polygon around the object, and this allows us to account in some way for some of these real-world scenarios. We can also take it a step further and classify every pixel within the polygon, but even with this degree of accuracy there are edge effects. For example, drawing a polygon either just inside or outside a leaf margin may lose shape information that’s crucial for AI feature extraction and classification.

“The advantage of synthetic images is that they can deliver 100% accurate annotation at a pixel perfect level, something that’s not possible with real-world images. That’s a breakthrough,” says Colin.
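To see why a rectangle is a blunt label, it can help to count pixels. The sketch below is illustrative only: the 6 x 6 mask is made-up data and the function names are hypothetical, not anything from AgriSynth's software. It measures how much of a bounding box is actually plant when the true pixel-level label is known, as it would be for a synthetic image.

```python
# Toy pixel-level label for one weed: 1 = weed pixel, 0 = anything else.
# The values are invented purely for illustration.
MASK = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]


def bounding_box(mask):
    """Return (top, left, bottom, right) of the tightest box around the weed."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return min(rows), min(cols), max(rows), max(cols)


def box_purity(mask):
    """Fraction of pixels inside the bounding box that really are weed."""
    top, left, bottom, right = bounding_box(mask)
    box_area = (bottom - top + 1) * (right - left + 1)
    weed_pixels = sum(sum(row) for row in mask)
    return weed_pixels / box_area


print("bounding box:", bounding_box(MASK))
print("share of box that is weed:", box_purity(MASK))
```

In this toy case only 7 of the 16 pixels inside the box belong to the weed. The rest is background or, in a real crop, a neighbouring plant that the AI is being told to treat as part of the target.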

As well as providing very precise information for machine learning, synthetic images can be produced for numerous different scenarios, such as crop or disease progression – even simulating scenarios that haven’t yet happened – removing another weakness in the real-time or historic imagery currently used in agricultural AI systems and potentially enabling a rapid response to a new agronomic threat.

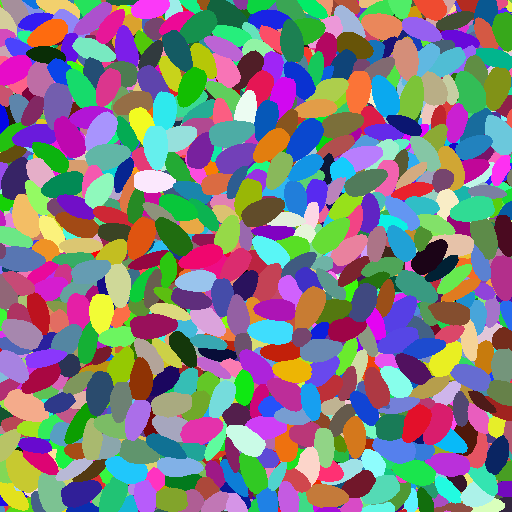

Currently AgriSynth has developed three imaging engines; one is for broadleaf plants, another is for grass-type plants, and a third is for grain quality characteristics.

“For the broadleaf weed engine, we’ve developed lots of rules so that it knows how a typical plant develops. We can texture the leaves and configure it to mimic a growing plant, rather like a time-lapse photographic sequence for a particular species.

“We define plant characteristics such as leaf shape, texture, thickness, and the number of leaves per plant, as well as the variance that can be found around these characteristics as the plant grows. We can even produce leaves with blemishes to mimic disease issues or damage from insect pests. With many thousands of parameters, we can configure the engines to produce huge datasets of images very rapidly for specific species with variances seen in the real world.”
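The idea of defining characteristics plus the variance around them can be sketched in a few lines. This is a loose illustration under stated assumptions, not AgriSynth's engine: the parameter names, the normal distributions and the example values are all hypothetical, and a real engine would go on to render an image from each sampled plant. The point is that every generated plant arrives with its label already attached.

```python
import random
from dataclasses import dataclass


@dataclass
class PlantSpec:
    """One sampled plant description; the label (species) comes for free."""
    species: str
    leaf_count: int
    leaf_length_mm: float
    blemished: bool


def sample_plants(species, n, mean_leaves, mean_length_mm,
                  length_sd_mm, blemish_rate, seed=0):
    """Draw n plant descriptions around the given means (hypothetical model)."""
    rng = random.Random(seed)  # seeded so a dataset can be regenerated exactly
    plants = []
    for _ in range(n):
        # Leaf count varies around the mean but is never below one.
        leaf_count = max(1, round(rng.gauss(mean_leaves, 1.0)))
        # Leaf length varies with the chosen standard deviation.
        leaf_length = max(1.0, rng.gauss(mean_length_mm, length_sd_mm))
        # A share of plants get blemishes to mimic disease or pest damage.
        blemished = rng.random() < blemish_rate
        plants.append(PlantSpec(species, leaf_count, leaf_length, blemished))
    return plants


# Example values are invented for illustration only.
dataset = sample_plants("charlock", n=1000, mean_leaves=4,
                        mean_length_mm=30.0, length_sd_mm=6.0,
                        blemish_rate=0.1)
print(len(dataset), "labelled plant descriptions, first one:", dataset[0])
```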

Grassweeds within cereal crops pose a particular problem when training AI systems due to the additional layers of complexity in identifying them compared with broadleaf weed recognition. AgriSynth has just completed a SMART project funded by Innovate UK, in collaboration with Real-Time Tech and Crop Health and Protection (CHAP), which set out to develop a blackgrass engine that can be used to train AI at less than 10% of real-world costs.

The project involved field trials where blackgrass was photographed in winter wheat crops, some of which were facilitated by CHAP, explains Agri-Tech Centre’s innovation sector lead, Dr Richard Glass. Further trials were conducted by Oxford Agricultural Trials (OAT), which undertook three trials at Woodstock, Stratton Audley and Shillington. The team captured over 108,000 high-quality images from structured plots that allow AgriSynth to classify both wheat and blackgrass in every image.

“These real-life images were used to validate the accuracy of the synthetic imagery generated to train the blackgrass engine,” explains Richard.

The ability to target blackgrass precisely within winter wheat crops is something that’s attracting the interest of farmers, not just because of the cost burden of an overall programme of the herbicides needed to control it, but also because of the relentless progress of herbicide resistance.

“Blackgrass costs farmers £400M a year in lost yield and increasing levels of resistance mean that controlling it using a dwindling number of selective herbicides is unlikely to be sustainable in the long-term, so we have to find new ways of controlling it,” explains Colin.

Delayed drilling is an effective cultural tool to reduce the blackgrass challenge but changing weather patterns are upping the risk of not getting a crop in when drilling into October. The pressure is growing to plant wheat in September to make sure a crop is established before it’s too wet.

The ability to target blackgrass precisely opens the door to using non-selective herbicides, such as glyphosate, or even herbicides not normally used in the crop. These methods are already practised in Australia to help control resistant ryegrass.

“By precision, I mean the ability to target an area of 5mm x 5mm and using the synthetic imagery developed in this project, we can clearly recognise, distinguish and classify blackgrass in wheat in a precise pixel-level manner,” explains Colin.

“We’ve developed a targeting system where we can alter the resolution of the treatment from 25mm down to 1mm depending on the grid system we overlay. This gives us the ability to be more aggressive if there’s lots of blackgrass and the farmer could accept losing say 10% of the crop in these areas if an active such as glyphosate was applied.”
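The trade-off between grid resolution and crop loss can be shown with a small calculation. The sketch below is an assumption-laden illustration, not the company's targeting system: it supposes a pixel-level blackgrass mask at 1mm per pixel, uses an invented 50mm x 50mm patch containing one thin blackgrass leaf, and treats any grid cell that contains at least one blackgrass pixel. The function names are hypothetical.

```python
def cells_to_treat(mask, cell_mm):
    """Grid cells (row, col) containing at least one blackgrass pixel.

    Assumes one mask pixel is 1mm x 1mm and that the patch dimensions
    are exact multiples of cell_mm.
    """
    return {
        (r // cell_mm, c // cell_mm)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value
    }


def treated_fraction(mask, cell_mm):
    """Share of the whole patch that would receive herbicide."""
    treated_area = len(cells_to_treat(mask, cell_mm)) * cell_mm ** 2
    total_area = len(mask) * len(mask[0])
    return treated_area / total_area


# Invented 50mm x 50mm patch: a single blackgrass leaf 1mm wide, 20mm long.
PATCH_MM = 50
patch = [[0] * PATCH_MM for _ in range(PATCH_MM)]
for r in range(20):
    patch[r][10] = 1

for cell_mm in (25, 5, 1):
    share = treated_fraction(patch, cell_mm)
    print(f"{cell_mm}mm grid: {share:.1%} of the patch treated")
```

With the 25mm grid the whole leaf falls in one cell, so a quarter of the patch is sprayed. Finer grids shrink the treated area towards the leaf itself, which is the difference between an aggressive setting and one that spares more crop.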

The company is now ready to develop the hardware to square the circle and make such precise applications a reality. “We’re looking at the concept of what would essentially be an inkjet printer or micro-spray system for crops,” he adds.

Blackgrass is the first use case and further development within the project will enable other species to be classified in a wide range of conditions.

AgriSynth has also worked with a Canadian company to develop a grain ‘engine’ which will be used to help tackle the problem of grain quality, explains Colin. “Canadian red wheat has about 85 quality characteristics, including things such as chalky grains, broken grains, fusarium grains and green grains. And depending on the profile of those characteristics, it will affect the value of the wheat,” he explains.

“The grain trading process, end to end, is based on sampling the grain, assessing its quality and coming up with a price. And currently the farmer doesn’t have much say in that. The quality assessment is carried out by skilled humans that train for 6-7 years but it’s still subjective, based on the quality of the sampling and generally funded by the buyer.”

Synthetic imagery could improve the accuracy of the assessment and because some quality characteristics are rare, it’s perfectly placed to produce a data set to train AI that would take years using real life imagery, believes Colin.

“We’ve produced these images and trained a model on some of the characteristics and can then link to a camera on the combine to take images of the grain as it comes into the tank. Assessing the quality on the farm in this way could change the whole value chain of grain trading and that’s what we’re trying to achieve.”

Colin’s realistic that these changes won’t happen overnight and will meet resistance from the grain trade but he’s confident that over time, this information will be to the benefit of growers when it comes to selling their produce. And it’s just one example of many different crops where it would be to the grower’s advantage to adopt a similar approach.

“Vertical farming is an example where we could produce synthetic datasets to be certain the produce is blemish-free and of perfect quality for the supermarkets,” he adds.

So far AgriSynth is only touching the tip of the iceberg of what could be possible using synthetic imagery in agriculture – part of the company’s ethos is to stimulate innovation by making AI and machine learning more accessible to researchers and other companies.

The concept also extends to any area where subjective assessments are made by humans, such as those made in replicated small plot trials. “Identifying the percentage of a leaf infected by a disease is a skilled job but it’s subjective. Differentiating between two types of one disease is very skilled. Synthetic imagery opens the door to the whole of R&D being camera-enabled and objective, rather than human-enabled and subjective.

“And with billions of research plots around the world, that is a potential game-changer in the way we develop a range of solutions,” concludes Colin.

Green-on-green in practice

One of the companies at the forefront of bringing green-on-green technology to the field is French company Bilberry, bought by Trimble in 2022. With algorithms well-developed for crops in Australia and the US, the UK market is more of a challenge with its crops predominantly drilled at relatively narrow row spacing.

Keen to assess the technology early doors, Dyson Farming Research added the Bilberry system to its new Dammann Profi Class self-propelled sprayer. Since its arrival in September 2020, the team – led by trainee agronomist George Mills, who was previously a sprayer operator for the company – has been assessing its capabilities and helping Bilberry build an image database to further develop and refine its set of algorithms for the UK.

The Bilberry system is capable of green-on-brown and green-on-green applications. The green-on-brown gives the ability to spot spray weeds in stubbles so has primarily been used with glyphosate to create stale seedbeds in the autumn. It’s a capability that George rates as ‘really successful’ and has a lot of confidence in using.

When it comes to green-on-green, the algorithms are still limited but the Bilberry set-up currently has the capability of spotting broadleaf weeds, grassweeds in oilseed rape, any weeds in maize, and broadleaf weeds in grassland.

“Developing the algorithms is very slow because it requires images to be taken in the field, which then have to be labelled by a human to enable the AI to learn for itself,” says George.

The Dyson team has helped Bilberry with algorithm development by sending images to Bilberry as the sprayer goes through crops. “The cameras take images every 30 seconds as the sprayer goes through a field and these are stored on a hard drive in the sprayer which we later download and send to France for algorithm learning,” he explains.

The 36m sprayer boom has RGB cameras mounted above it at 3m spacing, which means that when the booms are folded the cameras are above the cab. “It’s one of the drawbacks we’ve found with the system,” says George. “The cameras are vulnerable because of where they’re mounted and you have to be very careful not to damage them accidentally. If a camera needs repair, it has to go to France which isn’t a quick process and means the sprayer may have to work with a camera missing.”

It’s fair to say green-on-green application has been a learning experience and success has been mixed. “We’ve targeted volunteer potatoes in winter wheat with fluroxypyr between the T1 and T2 timings which worked to some extent for volunteers that were showing above the canopy. The drawback is shading which meant it wasn’t possible for the AI to spot most volunteer potatoes below the canopy – these had a 90-95% miss rate.”

The extra pass needed also points to another downside of green-on-green applications, he says. “Generally, spot spraying herbicides means an extra pass because we’re targeting the whole crop when we’re applying fungicides and PGRs. The cameras also require good light levels to function which may mean missed opportunities early or late in the day.”

The farm grows oats and vetch for its anaerobic digester and a problem with charlock in a field provided an opportunity to test the broadleaf weed algorithm. “It’s possible to set a threshold for the algorithm to trigger a spot application and we used this to find out whether we could target charlock by its larger size, hopefully limiting any damage to the smaller leaved vetch. By setting the threshold at 60%, we managed to take out the largest charlock and although we did sacrifice some vetch it was at an acceptable level.”
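How a trigger threshold filters what gets sprayed can be sketched as follows. The article doesn't say what the 60% figure measures inside the Bilberry system, so this assumes, purely for illustration, that each detection carries a score between 0 and 1 and that larger weeds tend to score higher. The detection data and the function name are hypothetical.

```python
def select_targets(detections, threshold=0.6):
    """Return the detections whose score meets the trigger threshold."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    return [d for d in detections if d["score"] >= threshold]


# Invented detections; the scores are assumptions, not measured values.
detections = [
    {"id": "large charlock", "score": 0.85},
    {"id": "small charlock", "score": 0.40},
    {"id": "vetch", "score": 0.25},
    {"id": "vetch beside charlock", "score": 0.62},
]

sprayed = select_targets(detections)
print([d["id"] for d in sprayed])
```

Raising the threshold spares more of the crop but lets smaller weeds through. Lowering it does the opposite, which is the balance George describes between taking out charlock and sacrificing some vetch.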

The grassweeds in OSR algorithm has had limited testing, admits George, but the system has really come into its own for weeds in maize. “It’s easy for the camera to see weeds in the maize crop and we’ve found a 90% hit rate on flagged weeds when we’ve conducted efficiency tests. With a potential 60-90% saving in herbicide costs, we’ll be using the system as much as is logistically possible in maize.”

It’s the degree of those potential savings in herbicide costs that needs to be considered carefully before investing in a green-on-green system. “The Bilberry set up on the sprayer cost around £100,000 in 2020 – the system itself was around £70,000 and additionally we had to specify options on the sprayer that we wouldn’t otherwise have needed.”
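A rough payback calculation shows how those figures interact. The £100,000 cost and the 60-90% saving range come from the article. The annual herbicide spend on crops where the system can be used is a made-up figure, and the sketch ignores the cost of any extra pass, repairs and downtime, so treat it as a starting point rather than a budget.

```python
def payback_years(system_cost, annual_herbicide_spend, saving_fraction):
    """Years for herbicide savings alone to cover the system cost."""
    annual_saving = annual_herbicide_spend * saving_fraction
    if annual_saving <= 0:
        raise ValueError("annual saving must be positive")
    return system_cost / annual_saving


SYSTEM_COST = 100_000          # from the article (2020 price, in pounds)
ANNUAL_SPEND = 50_000          # hypothetical spend on suitable crops

for saving in (0.6, 0.9):      # saving range quoted for maize
    years = payback_years(SYSTEM_COST, ANNUAL_SPEND, saving)
    print(f"{saving:.0%} saving: payback in about {years:.1f} years")
```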

There are lots of advantages to having the Bilberry system over and above the potential to reduce pesticide use and the environmental benefits that brings, says George. “Spot spraying could be a way of increasing the longevity of active ingredients and the sprayer components aren’t working as hard as when broadcast spraying, potentially meaning sprayers could have longer lives or a move to a smaller machine may be feasible.”

For many growers, the capability to spot treat blackgrass would offer the biggest potential savings in herbicide but George reckons it’s still not close to becoming a commercial reality.

“Bilberry has developed an algorithm for grassweeds in cereal crops, but it relies on weeds being visible above the canopy. That’s too late for treating blackgrass in the UK as we’re applying chemistry in the autumn when the blackgrass is still very small.”

Although it’s still early days for green-on-green technology, the degree of development in this area is a promising sign that any current weaknesses may soon be overcome.